Architecture for Disposable Systems

Posted on January 15, 2026 • 3 minutes • 599 words

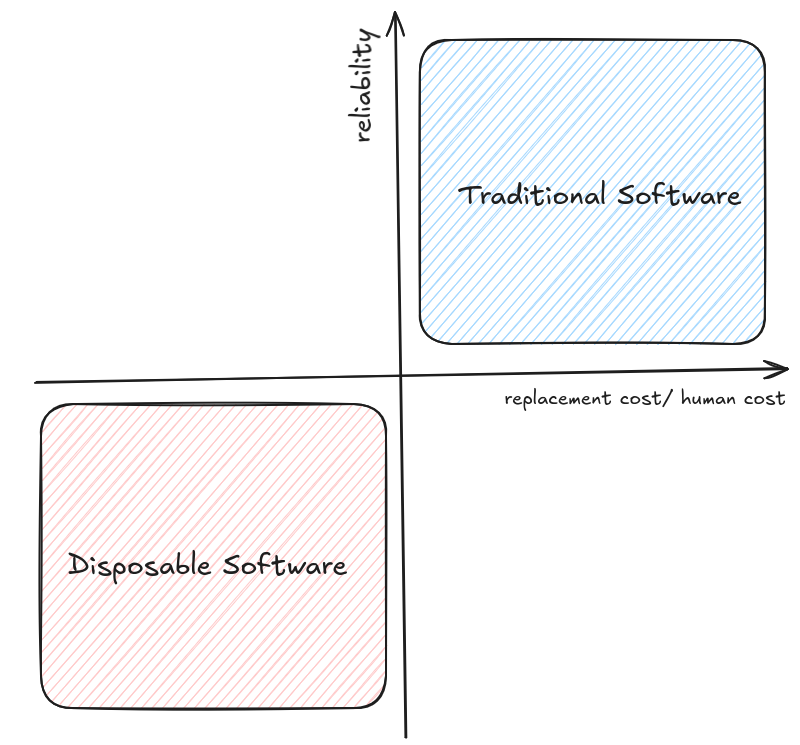

As software gets cheaper to produce (thanks to coding agents) and quality expectations shift, we’re witnessing the rise of disposable software: code that you generate, use, and discard rather than maintain indefinitely.

The Traditional Model

Traditional software follows a well-established pattern: you build something once, maintain it indefinitely, and pay for it through high upfront capital and long-term maintenance costs. The economics made sense because rewriting was expensive. We accepted spending as much as 80% of a project’s lifecycle on maintenance because the alternative, starting over, was usually prohibitive until the product reached its end of life (EOL).

This created a culture of careful engineering: clean code, thoughtful architecture, and refactoring to reduce technical debt. We optimized for the long term because the long term was inevitable: whatever we built, we had to live with.

The Disposable Shift

But what happens when an agent can regenerate a functional replacement from a prompt in 5 minutes? The incentive to “clean up technical debt” or “refactor for the long term” vanishes. If the code works now and you can regenerate it later, why invest in perfection?

We’re already seeing the rise of “vibe coding”: building tools that solve a problem right now. Need a specific data parser? Generate it. Need a one-off dashboard for a meeting? Generate it. Use it, and if it breaks or becomes obsolete, delete it and generate a new one. You don’t care if the code is “clean” as long as the output is correct.

This isn’t laziness. It’s a fundamental shift in the economics of software development. When generation is cheap, maintenance becomes the expensive option.

Architecture for Disposable Systems

If we’re moving toward disposable software, how do we architect systems that can survive this shift? The answer lies in a three-layer model:

The Core (Durable)

The Source of Truth. This is the hardened, human-written, slow-changing foundation of your system. It contains your critical business logic, data models, and core algorithms. This layer is built to last because it represents the fundamental value of your system.
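
To make the Core concrete, here is a minimal sketch assuming a hypothetical invoicing domain. `LineItem`, `invoice_total_cents`, and the half-up tax rounding policy are illustrative assumptions, not part of any real system. What marks this as core code is that it is small, typed, and enforces its invariants in exactly one place, so it is worth having a human read every line.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    """One invoice line in the hypothetical core data model."""
    sku: str
    quantity: int
    unit_price_cents: int  # integer cents avoid floating-point rounding

    def __post_init__(self) -> None:
        # Core invariants are enforced here, once, for every caller.
        if not self.sku:
            raise ValueError("sku must be non-empty")
        if self.quantity <= 0:
            raise ValueError("quantity must be positive")
        if self.unit_price_cents < 0:
            raise ValueError("unit_price_cents must be non-negative")


def invoice_total_cents(items: list[LineItem], tax_rate_bps: int) -> int:
    """Critical business rule: subtotal plus tax, with tax in basis points.

    Tax is rounded half-up to the nearest cent (an assumed policy).
    """
    if tax_rate_bps < 0:
        raise ValueError("tax_rate_bps must be non-negative")
    subtotal = sum(item.quantity * item.unit_price_cents for item in items)
    # Integer half-up rounding of subtotal * bps / 10_000.
    tax = (subtotal * tax_rate_bps + 5_000) // 10_000
    return subtotal + tax
```

Nothing here is generated or disposable: if the tax policy changes, someone edits this function deliberately, and everything in the outer layers keeps calling it unchanged.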

The Connectors (APIs)

Immutable contracts. These are the interfaces that define how components communicate. They have to be rigorous precisely because the disposable parts behind them are allowed to be sloppy. If your API contract is solid, you can swap out the implementation underneath without breaking the system.
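
In a real system the contract would usually live in an OpenAPI, Protocol Buffers, or Smithy definition. As a language-level stand-in, the sketch below uses Python's `typing.Protocol` to pin down the method any hypothetical `LineItemParser` must provide, plus a frozen dataclass for the record it returns. `ParsedLine`, `SemicolonParser`, `ingest`, and the version constant are invented for illustration.

```python
from dataclasses import dataclass
from typing import Protocol, runtime_checkable

CONTRACT_VERSION = "1.0.0"  # hypothetical; a breaking change means a new contract


@dataclass(frozen=True)
class ParsedLine:
    """Output record every parser must return; frozen so callers cannot mutate it."""
    sku: str
    quantity: int
    unit_price_cents: int


@runtime_checkable
class LineItemParser(Protocol):
    """The connector: any object with a matching parse() satisfies the contract."""

    def parse(self, raw: str) -> list[ParsedLine]:
        ...


class SemicolonParser:
    """One interchangeable implementation; it never inherits from the Protocol."""

    def parse(self, raw: str) -> list[ParsedLine]:
        out: list[ParsedLine] = []
        for row in raw.strip().splitlines():
            sku, qty, price = row.split(";")
            out.append(ParsedLine(sku.strip(), int(qty), int(price)))
        return out


def ingest(parser: LineItemParser, raw: str) -> list[ParsedLine]:
    # Callers depend only on the contract, never on a concrete parser class.
    return parser.parse(raw)
```

Because `SemicolonParser` is only structurally related to the protocol, it can be deleted and regenerated without touching `ingest`. Note that `isinstance` against a `runtime_checkable` protocol only checks that a `parse` attribute exists; the signature and return type are enforced by a static checker such as mypy, not at runtime.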

The Disposable Layer

AI-generated “glue” code, data parsers, UI components, and integration scripts. This is where the vibe coding happens. Generate it, use it, and regenerate it when needed. As long as it adheres to the contracts defined by the Connectors layer, it doesn’t matter how messy the internals are.
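
The sketch below imagines what a throwaway component might look like: a quick parser for one vendor's CSV export, written (or generated) with little regard for structure. The vendor format, its column names, and the `CONTRACT_FIELDS` mapping are all hypothetical. What makes it safe to discard is the small `conforms` check at the boundary, which stands in for the contract defined by the Connectors layer.

```python
import csv
import io
from decimal import Decimal

# Hypothetical contract for a parsed line item: field name -> exact type.
CONTRACT_FIELDS = {"sku": str, "quantity": int, "unit_price_cents": int}


def conforms(record: dict) -> bool:
    """Boundary check: exact field set and exact types, nothing more."""
    return set(record) == set(CONTRACT_FIELDS) and all(
        type(record[name]) is expected for name, expected in CONTRACT_FIELDS.items()
    )


def parse_vendor_x_export(raw: str) -> list[dict]:
    # Disposable internals: hard-coded column names, ad hoc cleanup, no abstraction.
    # Assumes a hypothetical export with columns "Item Code", "Qty", "Price (USD)".
    rows = []
    for r in csv.DictReader(io.StringIO(raw.strip())):
        price = r["Price (USD)"].replace("$", "").replace(",", "").strip()
        rows.append({
            "sku": r["Item Code"].strip().upper(),
            "quantity": int(r["Qty"]),
            "unit_price_cents": int((Decimal(price) * 100).to_integral_value()),
        })
    # The only part that matters long-term: the output must satisfy the contract.
    bad = [row for row in rows if not conforms(row)]
    if bad:
        raise ValueError(f"contract violation: {bad}")
    return rows
```

If a regenerated version of this parser ever produced, say, a string quantity, the boundary check would raise instead of passing bad data inward. The remedy is to regenerate the parser, not to loosen the check.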

Contract-First Design

The key to making this work is contract-first design. Instead of coding to an implementation, we must code to a strict schema: OpenAPI, Protocol Buffers (for gRPC), Smithy, or whatever standard fits your domain. The agent is given the schema as a constraint, and as long as the inputs and outputs match the contract, we don’t care how messy the logic inside the box is.
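
In practice the contract would be an OpenAPI, Protocol Buffers, or Smithy document checked by that ecosystem's own tooling. To show the loop without extra dependencies, the sketch below encodes a hypothetical `LINE_ITEM_SCHEMA` as a dict in the style of JSON Schema, embeds it in the request given to the agent, and gates the generated output with a deliberately tiny validator that supports only `type`, `properties`, `required`, and `additionalProperties`. The validator and the prompt wording are illustrations, not a substitute for a real JSON Schema library.

```python
import json

# Hypothetical contract: written once, reviewed by humans, handed to the agent.
LINE_ITEM_SCHEMA = {
    "type": "object",
    "properties": {
        "sku": {"type": "string"},
        "quantity": {"type": "integer"},
        "unit_price_cents": {"type": "integer"},
    },
    "required": ["sku", "quantity", "unit_price_cents"],
    "additionalProperties": False,
}

# Subset of JSON Schema type names this toy validator understands.
_TYPES = {"string": str, "integer": int, "boolean": bool, "array": list, "object": dict}


def _type_ok(value, type_name: str) -> bool:
    # bool is a subclass of int in Python, so exclude it from "integer".
    if type_name == "integer" and isinstance(value, bool):
        return False
    return isinstance(value, _TYPES[type_name])


def violations(instance, schema: dict, path: str = "$") -> list[str]:
    """List contract violations; an empty list means the instance conforms."""
    if not _type_ok(instance, schema["type"]):
        return [f"{path}: expected {schema['type']}"]
    errors: list[str] = []
    if schema["type"] == "object":
        props = schema.get("properties", {})
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}.{name}: missing")
        for name, value in instance.items():
            if name in props:
                errors.extend(violations(value, props[name], f"{path}.{name}"))
            elif schema.get("additionalProperties", True) is False:
                errors.append(f"{path}.{name}: not allowed")
    return errors


def build_prompt(task: str) -> str:
    """The schema travels with the request as a hard constraint on the output."""
    return (
        f"{task}\n\nReturn a JSON array whose items satisfy this schema exactly:\n"
        + json.dumps(LINE_ITEM_SCHEMA, indent=2)
    )


def accept(candidate_output: list) -> bool:
    """Acceptance gate: generated code passes only if every item conforms."""
    return all(not violations(item, LINE_ITEM_SCHEMA) for item in candidate_output)
```

Feeding the same schema into both the prompt and the acceptance gate means the contract, not the generated code, is the artifact that gets reviewed.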

The critical principle: immutable contracts. They must be perfect so that the disposable parts can afford to be imperfect.

This approach allows you to:

- Regenerate components without breaking the system

- Test contracts independently of implementations

- Evolve the disposable layer while keeping the core stable

- Accept lower-quality generated code because it’s constrained by high-quality contracts
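
The first two benefits can be made concrete with a contract test suite: a fixed set of cases that belongs to the contract rather than to any implementation, and that every regenerated component must pass before it replaces the old one. The sketch below assumes a hypothetical contract for a function that turns a price string such as `"$1,234.50"` into integer cents; `price_v1` and `price_v2` stand in for two generations of disposable code with entirely different internals.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Callable

# Contract cases live beside the contract, independent of any implementation.
CONTRACT_CASES = [
    ("$0.99", 99),
    ("$10", 1000),
    ("$1,234.50", 123450),
    ("  $7.05 ", 705),
]
# Inputs every implementation must reject with ValueError.
MUST_REJECT = ["", "abc", "$1.2.3"]


def check_contract(impl: Callable[[str], int]) -> list[str]:
    """Run the contract suite against any implementation; return the failures."""
    failures = []
    for raw, expected in CONTRACT_CASES:
        try:
            got = impl(raw)
        except Exception as exc:  # any crash on valid input is a contract failure
            failures.append(f"{raw!r}: raised {exc!r}")
            continue
        if type(got) is not int or got != expected:
            failures.append(f"{raw!r}: expected {expected}, got {got!r}")
    for raw in MUST_REJECT:
        try:
            impl(raw)
            failures.append(f"{raw!r}: accepted invalid input")
        except ValueError:
            pass
        except Exception as exc:
            failures.append(f"{raw!r}: raised {exc!r}, expected ValueError")
    return failures


def price_v1(raw: str) -> int:
    # Generation 1: Decimal-based parsing.
    cleaned = raw.strip().lstrip("$").replace(",", "")
    try:
        return int(Decimal(cleaned) * 100)
    except InvalidOperation:
        raise ValueError(f"bad price: {raw!r}") from None


def price_v2(raw: str) -> int:
    # Generation 2: regenerated from scratch, regex-based internals.
    m = re.fullmatch(r"\s*\$?([\d,]+)(?:\.(\d{1,2}))?\s*", raw)
    if not m:
        raise ValueError(f"bad price: {raw!r}")
    dollars = int(m.group(1).replace(",", ""))
    cents = int((m.group(2) or "0").ljust(2, "0"))
    return dollars * 100 + cents
```

Because `check_contract` only sees a callable, a third generation can be swapped in the same way: if the suite returns an empty list, the replacement is accepted; if not, it is regenerated rather than patched.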

The Future

We’re not there yet, but the trajectory is clear. As coding agents improve and generation costs drop, more and more software will become disposable. The systems that survive will be those built with durable cores, immutable contracts, and disposable peripherals.

The question isn’t whether this shift will happen. It’s whether your architecture is ready for it.